Nine best productivity apps to optimize your workflow

Kick your work into overdrive with our nine best productivity apps

While over half of respondents to a Lockdown Lessons survey commissioned by TalkTalk reported that they were more productive working from home, for many of us, working online all day also comes with plenty of distractions that weren’t as prevalent in office life.

When you’re working across multiple applications and software platforms, with email notifications constantly popping up and a thousand tabs open in your browser, it can easily feel as if you’re being productive when you’re really just jumping between windows and completing tiny tasks that don’t require as much focus.


Add to this the potential for screen fatigue and the temptations to check Twitter when you’re working in your home office or living room all day with no one holding you accountable, and you have a recipe for much more stress down the line when the real work piles up.

Fortunately, a variety of productivity and time-management apps have come to market recently that can help improve your workflow and keep you on task.

These apps cater to a wide range of needs, with some focusing on collaboration and task management and others blocking websites and monitoring online habits for those who need a stern hand.

Finding the right app for optimizing your workflow requires that you first understand what types of productivity tools are available to you.

Here we’ll break down some of our favorite productivity apps to help you make the right choice. Our top picks are separated into three categories of common productivity issues—workflow organization, improving working habits, and increasing focus—so you can skip to your main pain point, or read through all three.

Let’s get started.

Best productivity apps for workflow organization

These three productivity apps are here to help you keep your workday in order and get through it with minimal wasted time.

Serene

Cost: $4/£2.90 per month (10-hour free trial available)

Platforms: macOS

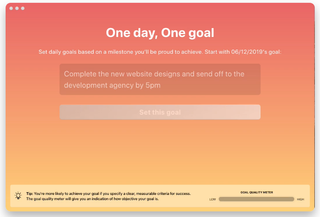

Download the Serene app and experience a wealth of productivity superpowers at your fingertips. Designed to assist remote workers, freelancers and hybrid teams, Serene takes a unique approach to helping its users optimize workflow.

By prompting users to set a single daily goal and then breaking this goal down into more specific action items, Serene helps users stay on track no matter what the day brings. Instead of letting a myriad of tasks distract you, the Serene app empowers you to focus on the ones that help you achieve your daily objectives.

What other useful tools does Serene offer those looking for a bump in productivity? There’s a website blocker designed to work with Serene’s app blocker, a session planner and a phone silencer, so you can truly bid farewell to pesky distractions.

Evernote

Cost: Free version available or $7.99+/£4.99+ per month for premium plans

Platforms: Windows, Mac, iOS, Android, Chrome and Safari

Evernote is a note-taking app with a virtually endless number of uses. You can use this app to take notes, store your most important ideas and collaborate on projects across teams. This is just a small sampling of the many features Evernote comes with.

Consider one of Evernote's most notable features: its search capabilities. Search for a word or phrase within the app, and it will scour stored text and look for images featuring the words you’ve searched for.

Slack

Cost: Free version available or $6.67+/£5.25+ per user per month for paid options

Platforms: Windows, Mac, iOS, Android and web

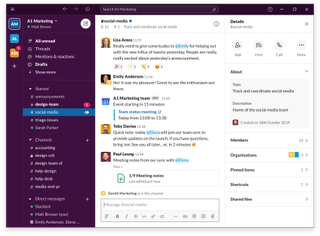

Slack is the ideal communication tool for collaborative teams and remote workers. Used by small and large businesses, Slack brings teams together regardless of each individual’s location so distance doesn’t force you to compromise productivity.

Slack organizes instant messages into channels, and team members can also chat outside of main topics in separate threads, so all of your conversations stay in one place. Additional features, such as integrated file sharing, allow users to drag and drop PDFs, images, videos and other files directly into Slack.

With its many useful tools and its ability to integrate with up to 2,000 apps, Slack’s collaborative software will have your team getting more done in no time.

Best productivity apps to improve your daily work habits

With these three productivity apps, you can develop healthy working habits, including remembering to stay off social media and remembering to step away from your computer once in a while.

Strides

Cost: Free with in-app upgrades

Platforms: iOS

Strides is unique in that it can be used to track just about anything. This flexibility makes it the ideal pick for those hunting for an app geared toward improving a multitude of daily habits.

Whether you need reminders to sneak away from the blue light of your computer screen for a few minutes each day or find yourself forgetting to check in with specific teammates, Strides sets targets and helps you manage your way to better daily habits.

HabitHub

Cost: Free

Platforms: Android, iOS coming soon

HabitHub’s ultimate goal is to be the only app you’ll ever need to improve your habits and achieve your goals. Fortunately, with its many useful features and intuitive interface, the company isn’t far from achieving that aim.

By combining a system of goals, rewards and statistical feedback, HabitHub provides its users with several graphs and charts to track progress over time. The app also gives users the ability to reward themselves for a job well done, making it all the more enjoyable to use.

Done

Cost: Free version available or $8.99/£8.99 per month for premium version

Platforms: iOS and Android

Done is the habit-development app that takes simplicity to the next level. From the moment you open the app, you’ll find yourself breathing a sigh of relief. From its aesthetically pleasing design to its smooth user experience, there’s something genuinely satisfying about Done.

By helping users set goals and track their progress, Done motivates users through streaks, creating chains that reflect the number of goals you’ve completed. As Jerry Seinfeld has shared of his own productivity endeavors, “After a few days you’ll have a chain. Just keep at it and the chain will grow longer every day. You’ll like seeing that chain, especially when you get a few weeks under your belt. Your only job next is to not break the chain.”

Best productivity apps to increase your focus

Stay laser focused on the task at hand with these three productivity apps.

Freedom

Cost: From $6.99/£6.49 per month or $29.04/£26.99 per year (seven-session free trial)

Platforms: Windows, Mac, iOS and Android

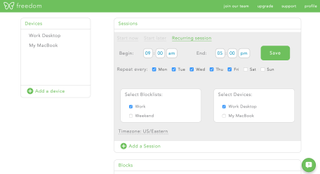

According to Freedom, you lose 23 minutes every time you check an email, scroll social media, respond to a notification or take part in any other workflow-breaking distraction. This lost time comes at a serious cost.

This is where Freedom comes in. By blocking distracting websites and apps, Freedom helps you focus on what matters most: work.

With Freedom, you’ll break time-wasting habits and build new habits that include extended periods of focused work time.

RescueTime

Cost: Free version available or $12.00/£11.99 per user per month for paid plans

Platforms: Android, iOS, Linux, macOS and Windows

RescueTime is far from subtle. It not only cuts off your access to distracting websites but also tracks how much time you spend working in different apps and sites.

By tallying up how you spend your time on your devices, RescueTime creates detailed reports that show time spent on work-related sites and apps versus time spent on more distracting sites.

RescueTime also generates detailed reports about what types of apps and sites you tend to use at different times of day. Because everyone defines "work" differently, you will have to set rules for what counts as productive and unproductive activity before getting started.

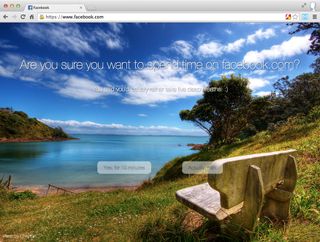

Mindful Browsing

Cost: Free

Platforms: Chrome

Mindful Browsing takes a different approach to helping its users rein in their online activity. While RescueTime blocks websites, Mindful Browsing asks if you’re sure you want to proceed to the sites you’ve deemed unproductive. Fortunately, if you do click through to such a site, Mindful Browsing will remind you to get back to work after 10 minutes.

When it comes down to it, Mindful Browsing is a simple piece of software, but its line of questioning enforces a dose of self-restraint, which is sure to help you develop better habits in the long run.

Finding the best productivity apps for your needs

No matter how strong your work ethic might be, you probably face a long list of distractions every day, all of which cut into your productivity.

Fortunately, there’s a long list of productivity tools out there. While the nine apps listed above are our favorites, finding the most productive workflow for your needs takes the right combination of tools.

If you’re committed to putting yourself on the path to productivity, we suggest taking each of these productivity tools for a test run. Try them in combination with one another, find what works best for you and you’ll be on your way to a more productive workday.
