Best mobile device management (MDM) solutions 2022

What are the best enterprise MDM solutions available for securing your corporate devices?

Mobile device management (MDM) is one of the most important business tools used by UK organisations. This is a system that helps IT administrators to secure and control tablets, smartphones, and computers used by employees in an organisation.

Thanks to new and modern initiatives like hot-desking and bring your own device (BYOD), and obviously the recent surge in remote working, MDM has become more and more popular in recent years. In particular, COVID-19 has led to a surge in the demand of this tool among businesses looking to take on MDM solutions. As the world of work is currently transforming into a hybrid model, it is probable this demand will continue or even grow, with UK businesses aiming to ensure endpoints distributed across their networks stay secure.

It is crucial for GDPR compliance to ensure your employees can only access the data they need, and that they are restricted from accessing other information. After all, organisations will be aiming to remain as compliant as possible to reduce the chance of getting a fine. Two ways that MDM systems can help businesses on their compliance journeys is by locking data if a device goes missing, or restricting data access for employees.

In addition to smartphones, MDM systems can manage laptops, tablets, and desktop PCs. This makes them extremely useful as they can manage cyber security risks across all workplace devices. For example, malware can be downloaded inadvertently in various ways, like through phishing attacks, and infects a number of devices regardless of their form. The best MDM systems out there will manage various devices at once, wiping or treating those infected with malware before the infection has time to spread.

Whatever your needs are from an MDM solution, there are many options available from a variety of providers. Here's a round-up of some of the best options, including offerings from IBM, Citrix and more.

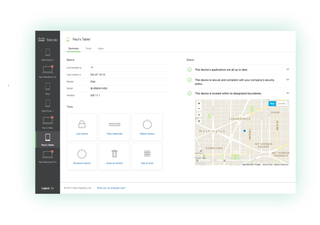

Cisco Meraki

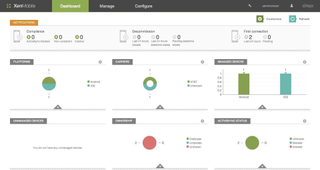

Perhaps one of the most well-known MDM platforms, Cisco Meraki allows you to manage every type of device in your business from one dashboard. No matter what you need to monitor - be it Android and iOS smartphones, or Linux, macOS and Windows PCs - you can do so. There's also an app for managing devices while not at your computer, monitoring usage and making sure all data is safe and sound.

The ultimate guide to going mobile for fire/emergency medical services

Get your free guide to going mobile for fire services and EMS

Cisco Meraki offers a bumper feature set, enabling you to enforce device security policies, deploy software and apps, and perform remote troubleshooting if any problems arise, monitoring calls and more on the devices across your network. Every device managed and monitored is regarded as a separate device, even if they're linked. This means you can, for example, allow certain apps to run on an employee's iPad but not on their linked smartphone.

Get the ITPro. daily newsletter

Receive our latest news, industry updates, featured resources and more. Sign up today to receive our FREE report on AI cyber crime & security - newly updated for 2024.

Cisco Meraki enables all of this to happen over the network, so even if you're trying to manage remote employee devices, it's a breeze. You can keep tabs on everything without anyone needing to be on the same network. It’s also easy to test-drive the platform with a browser-based demo with slated network devices and users. Plus, you can request trial hardware and get technical support to help with setup.

Pricing: Available upon request

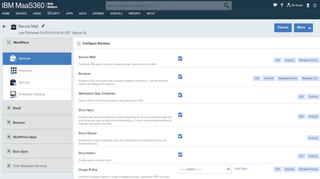

IBM MaaS360

IBM entered the MDM market following its acquisition of Fiberlink Communications back in 2013. Since then, Big Blue has been making some big improvements to its flagship MDM product. The service, which is powered by Watson AI, will easily integrate with existing IT infrastructure, enabling you to manage a diverse, complex endpoint and mobile environment.

IBM Maas360 also puts security at the forefront, securing and containing data accessed by users and keeping corporate apps and content separated while allowing for easy removal and access revocation. Its integrated threat defence also proactively shields corporate data.

IBM might not be the cheapest out there - especially since some of the services that you'd find bundled with other providers come at an additional cost - but with IBM's lengthy experience in enterprise security, you know you're getting a good quality solution for your money.

Pricing: From $4.00 (£3.32) per client device per month

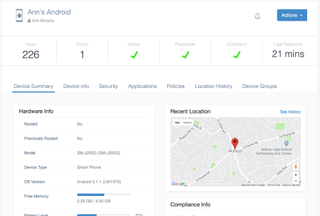

Hexnode MDM

Hexnode MDM lets you provision and manage devices, and prides itself on a user-friendly design. Users can add their own devices by connecting to the network or by using a portal installed on your company's website or intranet. Their device will then be added using their Active Directory credentials.

Once the devices are added, you can manage them whether they're connected to the corporate network or being used remotely. That means you can push configuration settings to the device, restrict functionality, manage mobile applications (including blocking App Store downloads and implementing a black/whitelist), check and enforce compliance and even remotely lock and wipe devices.

Hexnode MDM also offers a 30-day free trial if you want to get give it a test run.

Pricing: From $1.08 (90p) per device/month

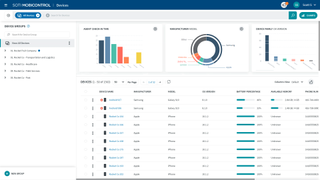

Soti MobiControl

Soti allows you to manage Android, iOS, Linux, macOS, and Windows devices from one place, for the entire lifecycle of the device within the organisation. They can be provisioned when first added to the company's fleet of devices, managed throughout their service and then wiped when it comes to retirement.

The platform was designed for use with ruggedised devices often used by fieldworkers and the healthcare, logistics, retail, and transport sectors.

The MDM platform can be installed on-premise or deployed on Soti's cloud. You can add devices to the platform using its Express Enrollment feature, which automatically delivers the settings, apps, and files a user needs over the air to get them up and running.

Pricing: Available on request

Citrix Secure Private Access

Citrix Secure Private Access, formerly known as Citrix Endpoint Management, is a zero trust network access tool with MDM functions. It allows the containerisation of business apps and personal apps, making it best suited to BYOD workplaces. Every device, including desktop PCs, smartphones and tablets, can be managed from one centralised console and devices don't even need to be enrolled to benefit from MAM, too.

As well as allowing the device owner to use a device provisioned and managed by the organisation, Citrix Secure Private Access has also been designed to let multiple users have access to one mobile device. This is particularly useful for industries such as healthcare, where field workers and emergency service workers may need shared ownership of one device.

However, it's likely one device will need applications and service provisioned with different access rights and this can be set up simply with Secure Private Access's MAM capabilities. IT managers can also lock down the device depending on the network location, stopping staff from using certain features outside of the corporate network.

Pricing: From $3 (£2.52) per device/month

VMware Workspace ONE

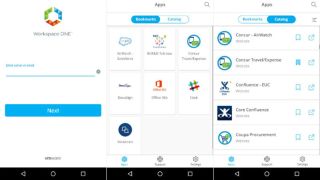

VMware Workspace ONE (formerly called Airwatch prior to its VMware acquisition) offers endpoint protection for all devices, regardless of the operating system, with full device management, whether it's a BYOD or shared corporate device.

You are able to deploy and manage any app via the platform's app catalogue, whether employees are trying to access them natively, on-device, via the web or remotely. The layered security across the individual user, endpoint, data, and network can all be centrally managed using the same mobility platform too.

Workspace ONE uses automation to carry out many everyday MDM tasks, which reduces the strain on IT staff. You don't have to manually provision or enrol devices - this happens without the need for any manpower, making it a perfect option for resource-stretched businesses.

Pricing: From $1.66 (£1.39) per device/month

The future of mobile device management

With life now beginning to return to offices across the world, it’s never been more important to ensure your organisation’s workers have great security. It’s likely that this will keep being seen by IT departments as one of their biggest challenges too.

Part of this is because of how popular hybrid working has become in recent times. There have been a number of attempts to make this the mainstream, including by the likes of Salesforce, which decided in February 2021 to offer employees the choice of three models for working. These were office-based in San Francisco, flex, or fully remote. This is a big step considering that approximately 18% of employees at the company were fully remote before the pandemic, compared to 50% by June 2021.

Some companies have gone an extra step, such as Airbnb, which told employees in April 2022 they can work from “anywhere”, regardless of the country they live in. The company’s new remote work policies stated that employees could also enjoy up to 90 days of work abroad.

Despite these benefits, combining in-office working with remote might be problematic when it comes to security. This is because the lines between what constitutes a home or work device inevitably blur, even if the organisation provided the employee with dedicated hardware. Importantly, it also involves workers regularly accessing their private networks at home, with the risk of falling victim to a wider variety of threats, and even transferring malicious software over to an organisation’s network when they work from the office. Companies not only have to think about adopting a clear BYOD policy, but also be extra vigilant when it comes to the security of their network.

With more employees making their way back to company property, even in a hybrid work model, MDM solutions are going to have a big role to play not only when it comes to security, but in GDPR compliance too. Bear in mind, however, this can come at a high cost. Decide which MDM option would be best for your business before investing anything. It’s also best to think about the scalability and price of the software, how good its security is, and how many devices or employees it’s able to support. Obviously, there are other things you’ll need to bear in mind before buying your ideal MDM solution, but this is a good place to start.

Of course, it would also be helpful to devise the appropriate MDM business plan on whether you are likely to see an ROI. However, security should be of paramount importance to any business. Failing to protect data can be catastrophic for a business, so there's no excuse not to take every measure to make sure your business stays afloat.

Connor Jones has been at the forefront of global cyber security news coverage for the past few years, breaking developments on major stories such as LockBit’s ransomware attack on Royal Mail International, and many others. He has also made sporadic appearances on the ITPro Podcast discussing topics from home desk setups all the way to hacking systems using prosthetic limbs. He has a master’s degree in Magazine Journalism from the University of Sheffield, and has previously written for the likes of Red Bull Esports and UNILAD tech during his career that started in 2015.