Best Windows 10 apps

We round up the best programs and apps to get you started on Microsoft's most recent OS

Everything is an app these days and for the biggest technology companies, app libraries are huge assets. From Apple's App Store to Google Play, having a place to purchase and download apps is an essential part of their services.

Microsoft has its own app store, from which Windows 10 apps can be downloaded on to any device, whether that's a smartphone, a hybrid machine, a laptop, a desktop or even your Xbox. The apps work in pretty much the same way on each, making it probably the most flexible computing platform around.

What's interesting about this is that the core architecture of Windows 10 is the same across all devices, so re-scaling apps works pretty seamlessly, and there are lots to choose from. Although Windows 10 looks and acts very differently from its predecessor, most Windows 8 apps can still be used as they were on the legacy platform.

Instead of requiring all apps to be re-built, Microsoft allowed developers to port their existing apps across and tag on extra Windows 10 functionality as they were deployed. That means that if you've got an app that you love in Windows 8 or Windows 8.1, you'll be chuffed to see that the UI and the way it works haven't changed since its move.

There's a wide selection of apps and desktop programs for you to start using with Windows 10, and we've rounded up the best offerings available right now. This list will be updated with new entries as the Windows 10 library continues to grow.

Get Started

A handy guide for new users, Get Started uses slideshows and video tutorials to walk you through the new platform.

It's a useful feature considering the new OS was released in July 2015, and represents quite a departure from operating systems like Windows 7, which many are more familiar with.

Just type Get Started into the search bar to pull up the app and scroll through the tabs on the left to pick a feature you want to learn about.

Adobe Photoshop Express

Price: Free

If you don't want to splash out on the full version of Adobe Photoshop, Adobe Photoshop Express is a great free alternative that will help you edit and refine pictures. It's been specifically designed for touchscreens, including mobile devices, tablets and notebooks, with an intuitive interface.

If you just want to crop or resize images, it's pretty straightforward to do so with a few taps and touches of your finger. There are a few different filters and looks you can add to pictures, and plenty of other refinements are available for touching up photos quickly: you can flip and straighten photos, remove red-eye, add a vignette or remove spots and blemishes. If you're pushed for time, you can just apply all the auto settings to improve your pictures with as little effort as possible.

Of course, it's no replacement for the full version of the program, but if you just need to use photo editing software now and again and don't need to do anything too advanced, it's a must-download for your Windows 10 device.

For access to the best features and to link your photo library to the application, you'll be prompted to set up an Adobe ID or log in using your Facebook or Google account, but it's no burden and means everything is saved in one place.

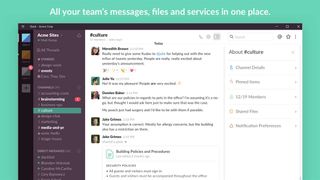

Slack for Windows 10

Publisher: Slack

Price: Free

Slack is one of the most popular communication and collaboration services on the internet. It allows team members to chat with each other in real time and offers a more productive experience than the humble email.

You can share and edit documents and collaborate with the right people, all in Slack. It integrates into your workflow with the tools and services you already use, including Google Drive, Salesforce, Dropbox, Asana, Twitter, Zendesk, and more.

Dropbox

Publisher: Dropbox

Price: Free

Dropbox is one of the most popular cloud storage services. It enables you to take photos, docs, and videos anywhere and share them. Any file saved to Dropbox can be accessed from any computer, phone or tablet, and on the web.

Pictures and videos can be viewed as a grid, while documents can be viewed as a list. First-time users signing up to the service get 2GB of free storage, which can be upgraded for free by completing a number of tasks (such as referring friends) or alternatively you can pay real money for extra storage.

OneDrive

Publisher: Microsoft Corp

Price: Free

OneDrive is Microsoft's flagship cloud storage app. Compatible with your Windows 10 PC, Windows Phone, Surface tablet, and other devices, it allows you to store your files and sync them across all of your devices. So whether it's crucial Word, Excel or PowerPoint documents for work, a selection of MP3s for an upcoming trip, or those holiday photos you want to share with friends and family, OneDrive can help you. It also integrates with Office 365, and offers additional features to business users.

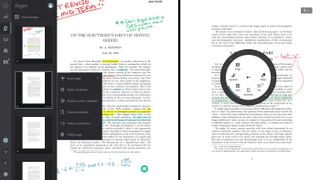

Drawboard PDF

Publisher: Drawboard

Price: 9.99 incl. VAT

Drawboard PDF is an app supported on Windows 10 which allows the user to open, read and save multiple PDF documents as well as create new PDFs directly from the app. Through the app, the user is able to mark up and annotate these PDF documents by underlining, highlighting, writing, or even drawing directly on the PDFs using either a stylus or their finger. The app also lets you bookmark, print, search, and share PDFs with others, making both finding a specific PDF and working collaboratively on one much easier.

Additionally, with in-app purchases, you can upgrade to Drawboard PDF Pro, which gives the user even more ways to work with PDFs, with features such as the Document Builder, Calibrated Annotations, Linear and Area Measurements, a Surface Dial compatible Protractor and Ruler, and Grid and Line templates and overlays.

Flipboard

Publisher: Flipboard, Inc

Price: Free

Flipboard allows its users to create their own personalised magazine to catch up on the news they are interested in as well as browse articles, videos, and photos their friends are sharing.

It works by letting users search for and save things that interest them, such as people, topics, hashtags and blogs. They can also search for and save sources they enjoy, such as People magazine or The New York Times. Once their interests are saved, Flipboard puts it all in one easy-to-access place.

The app also allows the user to connect with friends by sharing articles, videos, and photos through social media networks like Facebook, Instagram, and Twitter.

Microsoft Edge

In terms of killer apps, Microsoft Edge is the new big-ticket item. It replaces Internet Explorer as the default Windows browser, and Microsoft has put a lot of effort into sprucing up Edge for the new, internet-centric generation.

One of the most obvious changes is a new visual design, which blends the sparse, stripped-down appearance of Windows 8's touch-optimised Internet Explorer with the added utility seen in rivals like Chrome and Firefox, along with unique annotation tools.

Elsewhere, it's sporting a built-in Pocket or Instapaper-style reading list, which allows you to save interesting articles for later perusal. There's also a new Reading View, which breaks down and reformats the page to give a basic, uncluttered view of whatever article you're reading.

As a default browser, it's a massive step up from Internet Explorer, and Microsoft now has a viable rival to other companies' more impressive offerings.

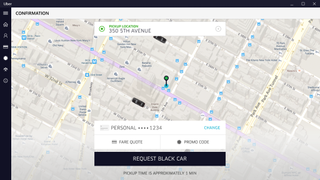

Uber

Publisher: Uber Technologies Inc.

Price: Free

Uber has arrived on Windows 10 PCs thanks to the Universal Windows Platform, which lets developers build an app that works across different types of devices.

You can pinpoint your location in the desktop app to request a driver to pick you up or, if you're too busy, you can just ask Cortana to request a cab for you by saying: "Hey Cortana, get me an uberX to [name an address here]".

If you're already working on your Windows 10 device, it means there's no need to pull out your phone to book a ride.

Additionally, if you pin the app to your Start menu, Uber will count down the estimated time of arrival of your taxi.

OneNote

Publisher: Microsoft Corporation

Price: Free

As one of the comparatively recent additions to the Office stable, OneNote is often unfairly overlooked as a basic note-taking tool. In reality, it has a surprising amount of functionality beyond this.

There's a full suite of drawing tools for use with touchscreens and styluses, so you can handwrite notes as well as type them. In addition to this, you can also record audio via your device microphone and link it to specific sections of your notes for easy reference.

Be warned, though: the desktop Office 2013 version of OneNote is the one to go for. The app version that comes preinstalled with many Windows machines has its uses, but it's severely lacking in functionality compared to its big brother.

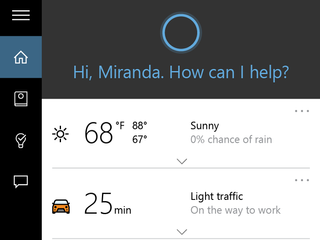

Cortana

Microsoft's answer to the recent wave of digital assistants like Siri and Google Now, Cortana is now built into Windows 10 on desktops. Like all DAs, it works best in conjunction with a paired phone on the same ecosystem, but Cortana is still useful even if you're on Android or iOS.

By default, Cortana appears as a search box just next to the start button on the taskbar. From there, you can search your computer and the internet, just like in Windows 8. However, Cortana will also organise your appointments, giving you context-sensitive reminders based on time, place or contacts.

Through machine learning, Cortana utilises your search history and other data to learn more about your likes and dislikes, building an intelligent profile of what to suggest to you in its notebook.

Naturally, it's got voice-activation options via the 'Hey Cortana' keyphrase, and Microsoft claims that it can understand more natural speech patterns than competitors. It's a useful tool purely on desktop, but it'll be downright essential once Windows 10 arrives on phones.

Twitter Moments

Twitter updated its Windows 10 app in December 2015 to include Moments, a feature that enables you to see the highest trending stories of the day.

Moments lets you browse conversations, video clips and pictures to check out the latest news relevant to you, and when you find a subject that piques your interest, you can follow it, with Twitter curating the best tweets about it to send to your feed.

Microsoft Word Mobile

Publisher: Microsoft Corporation

Price: Free

Most people may be more familiar with its full-fat Office cousin, but unlike OneNote, the app version of Microsoft Word is actually our favourite of the two.

As with all the mobile-friendly Office apps, Word is a lot more slimmed down compared to the desktop version. However, while this means a loss of functionality for many apps, for Word it actually results in a much more streamlined experience.

Some of the more fine-grained control is gone, but all the core options and elements remain intact. Font and paragraph options are present and correct, as well as change tracking and media insertion tools.

For the day-to-day needs of most users, the Word app is a perfect lightweight document editor, with a clean layout and a great set of cloud syncing tools to boot.

Xbox

Publisher: Microsoft Corporation

Price: Free

Consider this a must-have for any Xbox gamer. Through the Xbox app, Windows 10 now includes functionality for streaming games from an Xbox One directly to a PC, without the need for a high-end processor or graphics card.

Gamers no longer need to be confined to the bedroom or living room while playing their favourite games. Streaming to a Windows 10 laptop, games can be taken anywhere, as long as you're within range of your home WiFi network.

The app can also be used to capture game footage through the Game DVR system, which can then be browsed and uploaded remotely. Acting as a Facebook-esque social hub for all your gaming activity, the Xbox app is a real leap forward for cross-platform support.

This article was originally created on 21 July 2015. It was last updated on 10 May 2019

Bobby Hellard is ITPro's Reviews Editor and has worked on CloudPro and ChannelPro since 2018. In his time at ITPro, Bobby has covered stories for all the major technology companies, such as Apple, Microsoft, Amazon and Facebook, and regularly attends industry-leading events such as AWS Re:Invent and Google Cloud Next.

Bobby mainly covers hardware reviews, but you will also recognize him as the face of many of our video reviews of laptops and smartphones.