Best HR software

We round up the best HR tools, including software and mobile apps, to help you keep on top of HR in your organisation

HR needs to cover a whole range of functions within an organisation, including employee management, talent acquisition, payroll, development and performance.

Similarly, HR software is a complicated area, with numerous products available to manage different elements of your business.

Here we have rounded up some of the best tools around to help your HR department (or managers) choose software that lets you carry out HR tasks with the least amount of fuss.

Namely

Namely covers all HR bases, including payroll, benefits and talent management, from one easy-to-use interface. It's not industry-specific, which may be a drawback if you work in a specialised or highly regulated industry, but on the flip side, it means it's a competent solution for any large business.

With a highly configurable drag-and-drop UI that can be matched closely to your business's policies and processes, it's definitely worthy of a place in our top HR software list, not least because few other options offer such a wealth of features and personalisation in one solution.

Its most useful asset is secure storage of employee information, including the time off they've taken from their allowance (plus any other absences), which is a major bonus now that the General Data Protection Regulation (GDPR) is in force. It also offers performance management, a calendar, a task manager and a social news feed.

Its less endearing features are that it's quite expensive compared with competitors and takes a little longer to set up, but that's to be expected with HR software tailored specifically to your business rather than an off-the-shelf product.

Although it has its uses for mid-size businesses, it's probably a little too pricey for small firms and startups.

Bamboo HR

Bamboo HR covers the entire employee lifecycle and lets you manage everything related to your staff. That includes applicant tracking for jobseekers, an employee database that keeps tabs on everyone in the business, absence and sickness monitoring, holiday and leave tracking, performance management and some benefits administration.

Bamboo HR's interface is simple and easy to use, and the software also provides an API so you can integrate it with any other HR software you already have. However, all this comes at a price: it's an expensive option compared with other tools that do the same job.

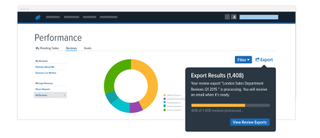

Sage People

Sage People (formerly Fairsail HRMS) isn't one of the best-featured HR platforms, as it lacks payroll administration and scheduling functionality. It still earns a spot in our best HR software roundup because it supports an astounding number of users (up to 13,000), which makes it a strong choice if you run a large organisation. It can also be customised to an organisation's needs and suits international organisations too. And although it misses out on a couple of key elements, it integrates with third-party platforms, so you won't lose out on much.

iCIMS Talent Platform

Most HR platforms excel at one element, while their other features get less attention. This is certainly the case with iCIMS, which, as the name suggests, has recruitment and talent acquisition at its core. It really is the best in its category, with iCIMS Recruit, iCIMS Connect and iCIMS Onboard working together to help your organisation onboard the best candidates and retain them throughout their time at the organisation. Although it doesn't match the breadth of the all-in-one solutions, it's definitely worth a mention in our roundup.

Kronos Workforce Ready

Kronos Workforce Ready is a modular HR system, giving organisations the option to stitch together just the modules they need or to implement the entire platform to cover every aspect of their HR processes.

It covers recruiting, onboarding, performance management, compensation planning, time and attendance, scheduling, absence management and payroll, with all data updated in real time so everyone is on the same page. A free mobile app lets HR staff access everything when they're away from their desks too, so you won't have to keep employees waiting for information.

Workday

Workday offers all the core functions you'd expect from an all-in-one HR tool, from recruitment and talent acquisition to learning management, benefits admin and payroll services, in one easy-to-use suite.

It also includes an employee database, with somewhere to store employee contracts and keep an eye on each employee's progress at the organisation. Its mobile recruiting app is an excellent addition for recruiters, allowing them to find and approach potential candidates even when they're away from their desks.

Image source: Sage People, iCIMS, Bigstock, Workday

Zach Marzouk is a former ITPro, CloudPro, and ChannelPro staff writer, covering topics like security, privacy, worker rights, and startups, primarily in the Asia Pacific and the US regions. Zach joined ITPro in 2017 where he was introduced to the world of B2B technology as a junior staff writer, before he returned to Argentina in 2018, working in communications and as a copywriter. In 2021, he made his way back to ITPro as a staff writer during the pandemic, before joining the world of freelance in 2022.