Best project management software

We round up the top project management software to keep on top of tasks

Digital transformation has become increasingly important in the world of technology, and if going digital lends itself well to any part of your business operation, it's project management and collaboration that can benefit the most.

Why? Because digitising the project management process means everyone can share information and insights on projects, track progress, assign tasks and make sure projects finish on time and on budget.

Although you may already be using shared files to spread the load, spreadsheets and word processing software just aren't an effective way of managing projects anymore. They're time-intensive, and tracking changes can become complicated.

Switching to an all-in-one project management platform can take the burden off staff, whether they're in the project team or other employees who need access to project files. With most project management software, you can add individual tasks, assign them to users, set deadlines and then move the tasks through an approval process if need be. A kanban-board-style tool like Trello lets you simply drag and drop tasks from one column to another, so everyone can see how work is progressing.

Choosing the right project management tool for your organisation can be a burden in itself. That's why we've collated the most effective project management tools to get you started.

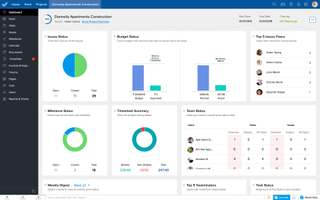

Zoho Projects

Zoho Projects is one of the most widely used project management platforms for small and medium-sized businesses. The premium version offers a whole spectrum of features and functionalities for users, allowing project managers to define tasks and assign them to teams and individuals, estimate project costs and follow up on tasks that haven't been completed yet.

What is excellent about Zoho is its document process automation, which makes it much faster to manage projects. For example, it automatically tracks changes and revisions to documents, and handles access control and retrieval. Key features include the built-in document sharing portal and issue management controls, so if a problem arises, project managers can resolve it before the project falls behind schedule.

There are five levels of subscription, from the free version for up to five users (with very limited functionality) up to $150 a month for an enterprise package that's suitable for up to 25 users (each extra person costs $5). This latter package adds a lot more into the mix, such as free templates, the ability to upload files of up to 120GB and lots of customisation options.
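As a rough worked example of the enterprise pricing quoted above, the sketch below estimates a monthly bill for a given team size. It assumes the $5 charge for each extra person is also billed monthly, which the figures above do not state explicitly, and the function name is hypothetical.

```python
def enterprise_monthly_cost(users: int) -> int:
    """Estimate the monthly enterprise cost in US dollars.

    Uses the figures quoted in this article: $150 a month covers up to
    25 users, and each extra person costs $5 (assumed here to be per month).
    """
    base_price = 150      # quoted monthly price for the enterprise package
    included_users = 25   # users covered by the base price
    extra_user_price = 5  # quoted price per additional person

    extra_users = max(0, users - included_users)
    return base_price + extra_users * extra_user_price


# A 30-person team has five users beyond the 25 included.
print(enterprise_monthly_cost(30))
```

On these assumptions, a team of 30 would pay for the base package plus five extra users.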

Price: From free (five users, limited functionality)

Main idea: Zoho Projects is such a popular platform because it offers lots of flexible features at a reasonable price point. Its UI is simple to use and managers have complete visibility of every project.

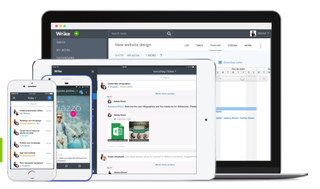

Wrike

Wrike is a cloud-based project management suite that is quick to set up and easy for everyone to use, whatever their level of project management training. Wrike is one of the more expensive project management suites we examine in this feature, but that's because it's so simple to use. It allows you to track time too, making it a perfect solution for projects that are billed per task, for example.

There are also plenty of social collaboration tools built into Wrike, and users can track their own tasks from a single dashboard. Wrike also supports assigning tasks and tracking deadlines and schedules, so you always have a real-time overview of how a project is developing.

Price: From free (five users, limited functionality)

Main idea: A great tool for getting up and running quickly, with the ability to see an overview of a project from a single dashboard

Trello

Trello is one of the many project management tools that use a Kanban-style drag-and-drop interface for managing projects. Each project has its own board, while tasks are cards. These move across columns as the project progresses, with each column representing a stage in the process.
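To make the board, column and card structure concrete, here is a minimal sketch of a Kanban board in Python. It is an illustration only, not Trello's data model or API; the `Card` and `Board` classes and their methods are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Card:
    """A single task on the board."""
    title: str
    assignee: Optional[str] = None


@dataclass
class Board:
    """One project: an ordered set of columns, each holding cards."""
    name: str
    column_names: List[str]
    columns: Dict[str, List[Card]] = field(init=False)

    def __post_init__(self) -> None:
        # Each column is a stage in the process and starts out empty.
        self.columns = {col: [] for col in self.column_names}

    def add_card(self, column: str, card: Card) -> None:
        self.columns[column].append(card)

    def move_card(self, title: str, src: str, dst: str) -> None:
        """Move the first card with this title from one column to another."""
        for i, card in enumerate(self.columns[src]):
            if card.title == title:
                self.columns[dst].append(self.columns[src].pop(i))
                return
        raise KeyError(f"No card titled {title!r} in column {src!r}")


board = Board("Website relaunch", ["To do", "In progress", "Done"])
board.add_card("To do", Card("Draft homepage copy", assignee="Sam"))
board.move_card("Draft homepage copy", "To do", "In progress")
```

Dragging a card between columns in a Kanban tool corresponds to the `move_card` step here: the task itself is unchanged, only the stage it sits in.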

You can assign tasks to others in the team and add labels to make it clear where they fit in the project. There's a wide range of integrations in Trello, including Google Drive for document storage and Slack for instant communication, and you can set deadlines for tasks too.

We use Trello on IT Pro to help manage projects and our content output and are, collectively, pretty impressed by its intuitive UI and ease of use. It really doesn't take much time at all to get up to speed with what's what and how it all works. There's also something really comforting about having a 'clear' in progress Trello list at the end of the day/week/project.

Price: From free

Main idea: A very visual project management tool that's simple to use and boasts a huge array of integrations

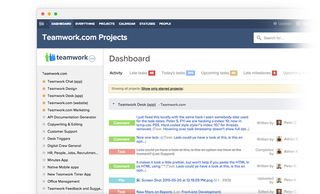

TeamWork Projects

The 2017 edition of TeamWork Projects comprises lots of tools for project managers and teams to keep tabs on how projects are progressing. You can create task lists, track how long it takes to complete a task, upload files for everyone to access and message other team members. Project managers are also able to assign tasks to people and track their progress from one place, while account managers can bill clients based on the time inputted by everyone involved in the project.

TeamWork Projects doesn't have the most attractive UI, but its feature set makes up for this and it's now becoming one of the most widely used platforms to help organisations keep up to date with everything happening in their business.

Price: From $49/month

Main idea: With detailed time tracking features and direct client billing, TeamWork Projects is perfect for businesses that need to bill clients regularly

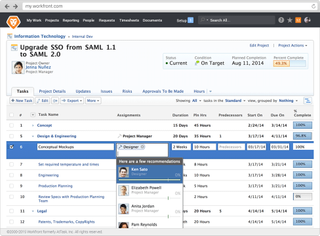

Workfront

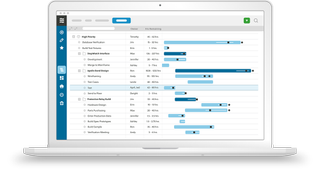

Formerly AtTask, Workfront puts a focus on task prioritisation, helping businesses make sure the most important elements of a project are completed first. Everything can be presented in a visual report so everyone in a team can view the work lifecycle. Project leads can assign work, track deadlines, check status updates and approve work, with collaboration features to comment on submissions too.

It uses a drag-and-drop interface for assigning tasks to team members and reordering task priorities, making it simple for anyone to use, whether an organisation follows Agile, Waterfall or an alternative project management methodology.

Price: From $30/month

Main idea: An all-in-one project management tool, suitable for use with any methodology

LiquidPlanner

LiquidPlanner claims to be the only project management solution that is dynamic enough to help teams in fast-moving environments. The three main tenets of the solution are:

- Focus on the priorities

- Adapt to changes

- Visualise the impact of proposed changes before rather than after the fact

It includes a number of features designed to deliver on its dynamic promise, including smart schedules, resource management and contextual collaboration.

The main premise of the software is that projects evolve, so teams need tools that can evolve in line with those changes and their knock-on effects.

Price: $9.99 - $69 per user per month, depending on the size of your business

Main idea: Good teams plan. Great teams execute


Maggie has been a journalist since 1999, starting her career as an editorial assistant on then-weekly magazine Computing, before working her way up to senior reporter level. In 2006, just weeks before ITPro was launched, Maggie joined Dennis Publishing as a reporter. Having worked her way up to editor of ITPro, she was appointed group editor of CloudPro and ITPro in April 2012. She became the editorial director and took responsibility for ChannelPro in 2016.

Her areas of particular interest, aside from cloud, include management and C-level issues, the business value of technology, green and environmental issues and careers to name but a few.