Best antivirus for Windows 10

Want to stay secure? Here's the best business-class protection around

Cyber attacks are one of the biggest threats to the safety and finances of any business in today’s world. Hackers are often indiscriminate in their targeting and will take anything and everything they can grab before disappearing forever.

This is why having an effective antivirus product running at all times is critical to ensuring that important documents, data, and other sensitive information remain yours and yours alone. A good place to start is our list of some of the best antivirus tools for Windows 10.

There are numerous options on the market, and it can be difficult to decide which one is best for a given organisation. Each offers different features and, most importantly, varying levels of protection, often measured by how well the program detects and removes malicious cyber threats.

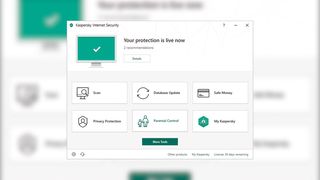

Kaspersky Internet Security 2020

One of the oldest names in the antivirus game, Kaspersky regularly appears in all the ‘top’ lists for good reason. The company has a longstanding pedigree and a strong record in producing effective, accurate solutions that keep cyber security threats away from the PCs it protects.

The company releases a new version every year to ensure the latest vulnerabilities and exploits are accounted for, and to keep the program compatible with the latest software users may have installed.

Being headquartered in Russia has somewhat affected the company’s reputation, given recent events, and leading national security authorities have publicly raised concerns over the West’s use of its software. That said, these allegations have been backed by little to no evidence, and Kaspersky maintains that there is no risk in using its products.

System requirements:

- Windows 7 or above

- macOS 10.12-12

- Android 5-12

- iOS 12-15

- Internet connection

- 2.7GB storage

- 1GHz processor

- 2GB memory

We have yet to review the 2022 version of Kaspersky’s AV product, but its 2021 version received top marks in our tests.

Price: £20.49 (introductory price)

Bitdefender Internet Security 2020

Bitdefender's AV software rarely changes much from year to year, but that's okay because each version is usually outstanding. The user interface is bright, colourful, and intuitive, and the suite includes solid ransomware protection too. It can also store your most important files in protected folders that trusted applications can access as usual, while everything else is firmly locked out.

System requirements:

- Windows 7 or above

- 2.7GB storage

- 2GB memory

Price: £29.99

You can read our 2021 review of Bitdefender Internet Security for more information.

McAfee Total Security

McAfee's previous "Internet Security" platform has recently been replaced by Total Security, an AV suite that works across Mac, PC and mobile devices. As well as providing internet security functionality, the software offers anti-phishing protection, online backup to help protect against data loss, a feature that detects and blocks unauthorised access or connections between your device and the internet, and URL shortening.

A McAfee Total Security subscription also provides access to the company's secure VPN, which provides bank-grade encryption to keep your personal information and online habits protected.

System requirements:

- 500MB free hard drive space

- 1GHz processor

- 2GB RAM

- Internet connection

Price: £29.99

We're yet to publish our McAfee Total Security review, but you can read our full McAfee Internet Security 2019 review for more information.

Panda Free Antivirus

Panda has long been one of the favourites in the free AV market, and this year's version still offers a great range of security features, along with a much-improved user interface compared with its clunkier predecessors.

How effective is it? In some ways, that's really up to you. While its 100% threat detection rate is certainly something to shout about, its 1.6% false-positive rate is one of the worst we've seen, beating only Windows Defender. This won't be much of an issue for technically aware users, but relative newcomers may find it difficult to know when to leave a file quarantined and when to mark it as safe.

System requirements:

- 200MB free hard drive space

- 300MHz Pentium processor

- 128MB RAM

- Internet connection

Price: Free

Read our full Panda Free Antivirus review for more information.

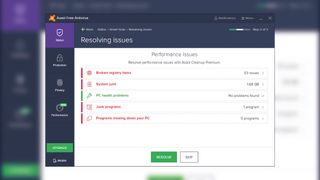

Avast Free Antivirus

Avast has always been a reliable bet for a free antivirus application. Its threat detection has always been up to scratch, and this year's version is no exception. It also boasts a near-unrivalled false-positive rate, beaten only by the paid Bitdefender, ESET and Kaspersky software. For these reasons, Avast is well worth a look for the cash-strapped, security-conscious user.

It's perhaps not one for the easily riled, though. While it sports some extensive features to back up its performance and an intuitive UI, the popups encouraging you to buy the upgraded version are far too frequent. Orange lock symbols also appear over an estimated 50% of the feature icons and, annoyingly, a scan checks not only for malware but also for minor and often insignificant 'performance issues'.

System requirements:

- 1.5GB free hard drive space

- Intel Pentium 4 / AMD Athlon 64 processor

- 256MB RAM

- Internet connection

Price: Free

Read our full Avast Free Antivirus review for more information.

Carly Page is a freelance technology journalist, editor and copywriter specialising in cyber security, B2B, and consumer technology. She has more than a decade of experience in the industry and has written for a range of publications including Forbes, IT Pro, the Metro, TechRadar, TechCrunch, TES, and WIRED, as well as offering copywriting and consultancy services.

Prior to entering the weird and wonderful world of freelance journalism, Carly served as editor of tech tabloid The INQUIRER from 2012 to 2019. She is also a graduate of the University of Lincoln, where she earned a degree in journalism.

You can check out Carly's ramblings (and her dog) on Twitter, or email her at hello@carlypagewrites.co.uk.