How to buy remote access software for business

Isn't it time you improved how you support remote staff? The latest tools save time and help employees stay productive.

The past three years have dramatically changed the way we do business and, despite initial misgivings, many companies are now firmly committed to flexible working. This can be a great boost to productivity, but for it to work properly, companies need effective remote support software that serves staff wherever they are: in the office, on the road, or working from home.

The good news is that the answer has been around for a while: remote access software is a mature technology that has helped IT teams for decades. Why waste time on unproductive, frustrating phone calls or expensive site visits when support technicians can put themselves in front of a user's computer without leaving their desk, or even their own home?

The biggest decision facing SMBs is choosing the right product, as there's a wide variety of solutions available. For this article, we recommend looking at four affordable remote support products that we have reviewed: IDrive, LogMeIn, NetSupport, and Wisemo. We have tested these on-premises, cloud-hosted, and hybrid solutions to help you decide which one will make your support department's life a lot easier.

Remote access software

Remote support products require an agent loaded on client systems to allow technicians to access them, and there are two distinct ways of doing this. For instant access in times of trouble, technicians can use on-demand (or attended) access, where the user is present at their desk.

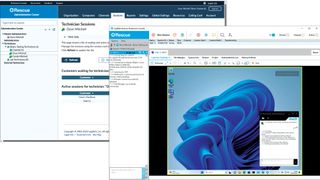

From their web portal or personal console, the technician sends the user a request containing a unique session PIN and a download link for a temporary agent. A session only starts once both sides have agreed to join and, when it ends, the agent software is automatically removed from the remote system without a trace.
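To make the attended flow more concrete, here is a minimal Python sketch of how a support service might issue and honour a single-use session PIN. It is an illustration under stated assumptions, not a description of how any of the products we reviewed actually work: the `SessionBroker` class, the six-digit PIN format and the ten-minute PIN lifetime are all hypothetical.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical values chosen for illustration only.
PIN_DIGITS = 6
PIN_LIFETIME_SECONDS = 600  # an unused PIN expires after ten minutes


@dataclass
class SupportSession:
    pin: str
    created_at: float
    technician_joined: bool = True  # the technician creates the session
    user_joined: bool = False


class SessionBroker:
    """Hypothetical broker pairing a technician and a user via a one-time PIN."""

    def __init__(self) -> None:
        self._sessions: Dict[str, SupportSession] = {}

    def create_session(self, now: Optional[float] = None) -> str:
        """Technician side: issue a fresh, hard-to-guess PIN to send to the user."""
        now = time.time() if now is None else now
        while True:
            # secrets provides cryptographically strong randomness, unlike random
            pin = f"{secrets.randbelow(10 ** PIN_DIGITS):0{PIN_DIGITS}d}"
            if pin not in self._sessions:
                break
        self._sessions[pin] = SupportSession(pin=pin, created_at=now)
        return pin

    def user_join(self, pin: str, now: Optional[float] = None) -> bool:
        """User side: accept the invitation. Fails for unknown, expired or reused PINs."""
        now = time.time() if now is None else now
        session = self._sessions.get(pin)
        if session is None or session.user_joined:
            return False
        if now - session.created_at > PIN_LIFETIME_SECONDS:
            del self._sessions[pin]  # expired PINs are discarded
            return False
        session.user_joined = True
        return True

    def is_active(self, pin: str) -> bool:
        """A session only starts once both sides have agreed to join."""
        session = self._sessions.get(pin)
        return session is not None and session.technician_joined and session.user_joined

    def end_session(self, pin: str) -> None:
        """Invalidate the PIN. A real service would also uninstall the
        temporary agent at this point; that step is not modelled here."""
        self._sessions.pop(pin, None)
```

In use, the technician calls `create_session()` and emails the PIN; the session becomes active only after the user's `user_join(pin)` succeeds, and a PIN cannot be reused or redeemed once it has expired. The sketch deliberately leaves out the agent download, transport encryption and authentication, which a real product handles.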

There will also be times when technicians need to access unattended systems. For these, a different agent is installed permanently: running in the background, it stays connected to the remote support service, allowing technicians to access the system whenever required.

Cloud vs. on-prem

Cloud-hosted remote support services are ideal for small businesses with a mix of office-based and remote workers. They're simple to deploy, and their subscription-based licensing makes it easy to control costs.

Technicians are provided with a personal web portal account to access the service, allowing them to open support sessions from anywhere on the internet. Users require minimal training, as in most cases they just need to follow the instructions in the email sent to them by the technician.

On-premises support solutions are great for businesses that don't want to rely on a third-party provider and need greater control over security and access. Most require an on-site central management server, but don't let this put you off: their hardware requirements are minimal and the on-premises product we tested took only ten minutes to install.

Originally developed to support systems on the same local network, most on-premises solutions now include a gateway component that extends secure access to remote workers. If you want the best of both worlds, consider a hybrid solution that combines an on-premises console with a cloud portal, allowing you to support local systems on the LAN and reach remote ones over the internet.

Remote access security

Security must be a top priority when choosing remote access software, as it can be extremely dangerous in the wrong hands. If it isn't configured correctly, it can punch a hole clean through your perimeter defenses and let intruders access systems as though they were sitting in front of them.

First, standardize on a single remote support product and make sure all your staff know which one you use. If a scammer can find an employee's email address, they can easily send a bogus support request from another product in an attempt to gain control; staff who know your chosen tool are far more likely to spot the fake.

A common attack vector is the use of trial versions of support software. Fortunately, most vendors have wised up to this and either block on-demand access in trial versions or warn users that the invitation may not be a bona fide request.

Choose products that can enforce multiple authentication methods for support sessions, including unique access codes, password protection, and integration with services such as Active Directory. On the technician side, activate two-factor authentication (2FA) to further protect access to technicians' portal accounts, and make sure the product uses 256-bit AES encryption for all communications between technicians and users to prevent eavesdropping.

Free remote access tools such as Chrome Remote Desktop and the Windows Quick Assist apps should be avoided in a business environment as they're impossible to manage and audit. For the same reasons, you shouldn't allow Remote Desktop Protocol (RDP) in the workplace.

Remote access software tools

Check which support tools are included, as these will improve the support experience. Naturally, all products provide remote control, but other valuable features include file transfer, screen sharing, note taking, remote registry editors, and audio, video, and chat services.

Workstation inventory speeds up troubleshooting as technicians can see what is installed on the user's computer before starting a support session. Session recording can create a video audit trail of support sessions, which can also be used for training purposes.

Most products focus on Windows endpoints, but if you have a mix of desktop devices, check what other platforms they support; most offer agents for macOS and Linux. Mobile support is an essential requirement, too, and you should expect to see technician and client apps for Android devices. You can't remotely control iOS devices due to the operating system's strict security model but, along with technician apps, some products offer facilities to broadcast screen contents and stream live video and audio to a technician.

In today's hybrid working environments, a properly configured remote support solution can pay dividends in terms of increased efficiency and productivity. All of the products we have reviewed offer a wealth of support services and tight security, so read on to see which one will best suit your business working model.

Dave is an IT consultant and freelance journalist specialising in hands-on reviews of computer networking products covering all market sectors, from small businesses to enterprises. Founder of Binary Testing Ltd, the UK’s premier independent network testing laboratory, Dave has over 45 years of experience in the IT industry.

Dave has produced many thousands of in-depth business networking product reviews from his lab which have been reproduced globally. Writing for ITPro and its sister title, PC Pro, he covers all areas of business IT infrastructure, including servers, storage, network security, data protection, cloud, infrastructure and services.